AI for Internal Communication: Safe & Effective Use

Artificial intelligence (AI) is a sophisticated technology enabling machines to simulate human-like intelligence.

It has become increasingly important to businesses because it can help tackle complex problems, generate new ideas, forecast outcomes and offer strategic recommendations.

How can internal communications specialists use AI in their work while staying alert to the security implications? We'll explore some common tools, show you ways AI can streamline internal communications, and highlight five critical limitations of AI.

Which AI tools best suit internal communications teams?

Here are a few AI tools that internal communicators should know:

| AI tool | Description | Best for... |
|---|---|---|
| ChatGPT | OpenAI's free-to-use AI language model for generating text, which gained more than a million users within its first week of release. | An all-rounder generative AI. Great for human-like conversations, answering a wide range of questions on many topics, and generating a large variety of content (social media posts, emails, refined messages, etc.). Compared with Google's Bard, it has many more integration capabilities. |
| Bard | Google's AI tool. It works much like ChatGPT, but it draws on Google's resources and data. | Organisations that use other Google apps (Gmail, Drive, etc.). Bard uses extensions to retrieve real-time information from your apps without leaving the chat. Unlike ChatGPT, it can show images from Google results, analyse images and create content based on them. |
| Microsoft 365 Copilot | Microsoft 365's AI tool, integrated with the Microsoft apps you use every day (e.g. Word, Excel, PowerPoint, Outlook, Teams and Edge). It combines your organisational data with Microsoft's AI models. | Organisations that use the Microsoft ecosystem or want to trial AI tools safely. Microsoft promises better productivity and creativity while handling business data in a secure, compliant and privacy-preserving way: organisational data won't be used to train its AI models, and your data is accessible only to authorised users within your organisation (unless you explicitly consent to other access or use). |
| GrammarlyGo | A writing assistant developed by Grammarly for consistent grammar and style. | Improving the quality and professionalism of written communications. |
| Notion AI | Developed by the productivity and note-taking app Notion. | Organisations with a Notion workspace: it can help you transform text, automate simple tasks and generate new content. |
| DALL-E 2 | OpenAI's tool for generating images from text prompts. | Beginners to AI-generated imagery. |
| Midjourney | Creates high-quality images from simple text prompts, without any specialised hardware or software. It works entirely through the chat app Discord. | Internal communications teams that use Discord, as Midjourney only runs on Discord. |
| Stable Diffusion | Generates photorealistic images from a text or image prompt. | Internal comms teams with intermediate experience of image generators, as it takes some know-how to make the most of its features and customisability. |

How can you use AI to improve internal communications?

Hate opening a document only to stare at a blank screen, not knowing where to start? With AI, the blank page doesn't have to be a problem. We've shared some ChatGPT prompts for internal communicators elsewhere, but here are some ways internal communications specialists can use AI:

- Repurpose content. Make one long-form PDF go the extra mile with AI: generate different rewordings of the same key messages to keep things fresh, extract snackable summaries to share on digital signage screens, or reformat content into different styles, like turning a lengthy safety policy into a rap to add some fun to your comms!

- Translate comms for global teams. Use AI translation tools to communicate effectively with global and diverse teams.

- Create content at scale. Quickly generate social media captions, blog articles and first drafts for new policies.

- Automate news updates. Use an AI content curation tool to pull relevant news, industry updates and internal announcements, then schedule them to go out through communication channels such as your digital signage screens.

- Develop a knowledge base for common queries. Select and train a chatbot to answer common questions about onboarding (such as company policies, benefits or procedures), routine matters (such as payroll or IT support), training and development (such as how to use apps and tools like Vibe), and health and safety guidelines (such as protocols, remote-work best practice or crisis management procedures).

- Personalise communications. Use an AI platform that can analyse employee data to develop personalised messaging based on employees' preferences and behaviour patterns.

- Choose the best way to present information. Let AI show you different ways to present information and data. For example, it could suggest turning your cybersecurity policy into fun quiz questions to reinforce learning.

- Generate custom imagery. Use AI image generation tools to quickly create high-quality imagery that supports key messages. No more spending hours hunting for the right stock photo.

Exploring the limitations of AI

With such an impressive and growing list of things AI can do for internal communications teams, you might be starting to sweat. But don't worry: AI is not a replacement for seasoned communicators like you. It can create content at scale, but you'll still need to check that its output is good quality. Here are five critical limitations of AI.

1. How data is collected, stored and used

AI models are built on data gathered from open-source datasets, synthetic datasets, data exported from one algorithm to another, or primary/custom data.

The challenge with AI data collection and storage is that models pull data from many sources, and some of it may even include someone's copyrighted artwork, content or other intellectual property used without their consent. The problem is compounded because models use this data for training and fine-tuning, and the process can become so complex that not even AI vendors can confidently say what data is being used.

Using AI tools like ChatGPT or Midjourney requires you to input your own data in the form of queries. Your queries may feed into the AI model and become part of its training data, which means they could surface in outputs to other users' queries. This becomes a huge issue if users share sensitive information with the AI tool, as some staff at Samsung did.

2. Bias within AI models

AI tools depend on their training data to generate content. The principle "garbage in, garbage out" applies here: the quality of the output depends on the quality and quantity of the data the AI model receives.

Bias can arise in an AI model's initial training data, in the algorithm itself, or in the predictions the algorithm produces. As a result, AI can reflect and perpetuate human biases within society, including historical and current social inequality.

Flawed training data can result in algorithms that repeatedly produce errors or unfair outcomes, or that even amplify the bias in the data. Algorithmic bias can also be caused by programming errors, such as a developer unfairly weighting factors in the algorithm's decision-making based on their own conscious or unconscious biases. And as the algorithm learns and changes over time, that evolution can introduce new bias.

3. The quality and accuracy of data

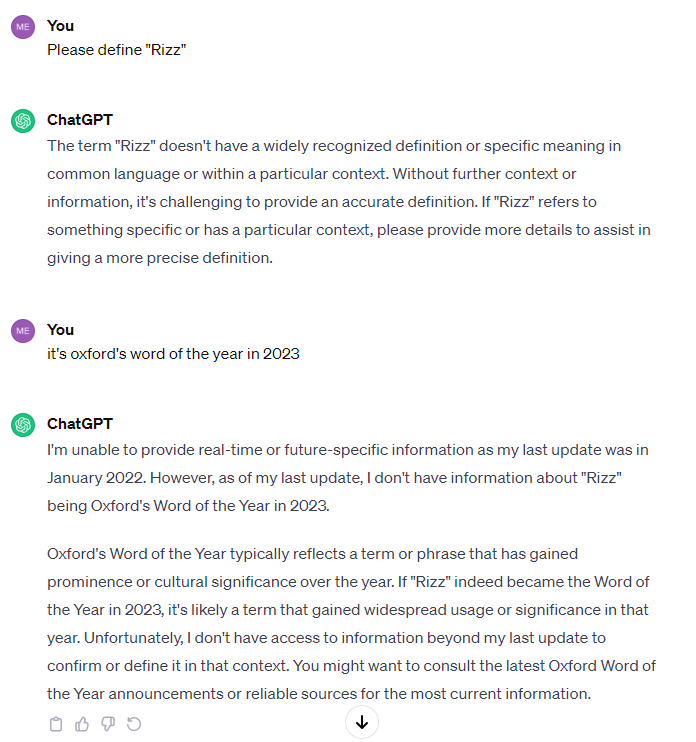

AI tools can sometimes struggle to grasp context, nuances, and cultural references. For example, ChatGPT is only trained up to 2022 as of late; it won't get the most up to date cultural references. Here I've asked it to define Oxford's 2023 Word of the Year, “rizz”, but it looks like it's beyond ChatGPT's capacity:

Another reason to doubt the reliability of AI tools is that they can make up facts and produce inaccurate information, known as AI hallucinations. We still need a human eye to check for errors, fact-check information and improve the quality of the content.

4. Data privacy

AI systems often rely on vast amounts of data to train their algorithms and improve performance. This data can include personal and sensitive information.

The growing uptake of AI tools raises concerns about how the data is being used and who has access to it.

Some AI tools, like Microsoft 365 Copilot, integrate with an organisation's data to provide better answers. Microsoft has made it clear in its policies that an organisation's data won't be used to train its AI models. Just like the data access controls you're used to in other Microsoft apps, its permissions model is designed to ensure that data won't unintentionally leak between users, groups and tenants.

As a rule of thumb, assume that any time you ask an AI something, your input will be cached and processed. Whether that data is stored or used for advertising or training purposes depends on the developer and its privacy policy.

As with anything on the internet, it's important to think about where and how to use these tools before giving them our personal information. AI is a new field, so the rules and policies governing it are still developing worldwide. Laws such as the GDPR and the California Consumer Privacy Act already regulate how organisations, including AI vendors, collect, store and process personal data.

One way AI vendors are encouraged to maintain data privacy is through anonymisation and de-identification of personal data. These techniques aim to balance protecting personal information with keeping the data useful. They help prevent data misuse and reduce the impact of data breaches.
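To illustrate that balance, here's a minimal Python sketch of one de-identification technique, pseudonymisation, applied to a hypothetical employee survey record. It assumes made-up field names (`email`, `age`, `department`, `engagement_score`) and a secret key stored separately from the data. Real vendors may use quite different methods. Names are dropped, email addresses become stable keyed-hash tokens (HMAC-SHA256), and exact ages are generalised into bands. The data can still be analysed, but nobody is named in it.

```python
import hashlib
import hmac

# Hypothetical secret key. In practice it would live apart from the data
# (e.g. in a secrets manager), so someone without the key can't regenerate
# tokens from a list of known email addresses.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymise(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a stable token derived from HMAC-SHA256.

    The same (normalised) input always yields the same token, so records stay
    linkable, e.g. for counting responses per person, without revealing who
    they belong to.
    """
    normalised = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalised, hashlib.sha256).hexdigest()
    return "emp_" + digest[:12]


def age_band(age: int, width: int = 10) -> str:
    """Generalise an exact age into a band such as '30-39'."""
    lower = (age // width) * width
    return f"{lower}-{lower + width - 1}"


def de_identify(record: dict) -> dict:
    """Return a copy of a (hypothetical) employee record with the name dropped,
    the email pseudonymised and the age generalised."""
    return {
        "employee": pseudonymise(record["email"]),
        "age_band": age_band(record["age"]),
        "department": record["department"],
        "engagement_score": record["engagement_score"],
    }


if __name__ == "__main__":
    raw = [
        {"name": "Alex Smith", "email": "alex.smith@example.com", "age": 34,
         "department": "Sales", "engagement_score": 7},
        {"name": "Alex Smith", "email": " Alex.Smith@example.com", "age": 34,
         "department": "Sales", "engagement_score": 8},
    ]
    for row in raw:
        # Both rows get the same "employee" token and the band "30-39".
        print(de_identify(row))
```

Pseudonymised data like this still counts as personal data under the GDPR, because anyone holding the key could re-link it. Full anonymisation needs stronger guarantees.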

3 tips to be a responsible AI user

- Check the privacy policy. A chore but worth the effort of knowing what you're agreeing to.

- Be mindful of what you share. For text input, avoid including personal, private or sensitive information (e.g. financial results, your address, bank details).

- Review your settings. Explore the privacy and security controls for your AI tool of choice. For ChatGPT, at the time of writing, you can stop it from using your conversations to train its models, but you'll lose access to your chat history.
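The "be mindful of what you share" tip can be partly backed up with a quick check before you paste text into an AI tool. Here's a minimal Python sketch built on a few illustrative regular-expression patterns. The pattern names and the `redact` helper are hypothetical and not part of any AI tool. It swaps obviously structured details, such as email addresses, UK-style sort codes and long account- or card-like numbers, for placeholders. It won't catch names, addresses or unreleased financial results written in prose, so treat it as a safety net, not a substitute for judgement.

```python
import re

# Illustrative patterns only: they catch common, clearly structured details
# and will miss plenty (names, addresses, figures written in prose).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SORT_CODE": re.compile(r"\b\d{2}-\d{2}-\d{2}\b"),
    # 8 to 19 digits, optionally separated by single spaces or hyphens
    "LONG_NUMBER": re.compile(r"\b\d(?:[ -]?\d){7,18}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder like [EMAIL] and count the removals."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


if __name__ == "__main__":
    prompt = ("Draft a reminder for jo.bloggs@example.com: payroll moves to "
              "account 12345678, sort code 12-34-56.")
    clean, found = redact(prompt)
    print(clean)
    # Draft a reminder for [EMAIL]: payroll moves to account [LONG_NUMBER], sort code [SORT_CODE].
    print(found)
```

Printing the counts as well as the cleaned text lets you see at a glance whether anything was removed before you send the prompt.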

5. Picking safe AI tools

All the talk about data breaches and misuse can be quite scary. These are some tips that can help you confidently assess risk and choose a suitable AI vendor for your needs.

- Assess the vendor's own AI governance. Ask for evidence of AI governance processes, defined roles & responsibilities for AI development, ethical codes of AI conduct, and any conformity to AI governance standards.

- Ask for evidence substantiating performance claims. The AI model's core structure and training techniques may be highly sensitive, but evidence of quality or performance should not be proprietary.

- Ask about data sources and collection methods. Find out what population the dataset represents, how the data was filtered for low-quality or inappropriate content, and how it is updated and kept fresh.

- Enquire about monitoring and incident reporting processes. A responsible AI vendor will have fast response times, continuously improve, and provide a clear path for reporting incidents.

- Ask vendors about their secure data handling procedures, and ensure they meet your own internal standards.

Vibe and Artificial Intelligence

Vibe is embarking on an AI journey to take communication teams to new heights of efficiency while amplifying their impact within the Vibe platform.

In the first phase of our AI journey, our team will create an AI assistant that can streamline tasks such as transforming lengthy content into easily digestible, bite-sized messages, sparking creative content ideas, dynamically generating images to reinforce messages, recommending Vibe templates tailored to specific content needs, and translating content for global teams.

The second phase will expand our AI assistant, with a focus on fully automating slide setup. This involves transforming content sourced from the AI engine, Word, PDF or PowerPoint, and working in harmony with Vibe's extensive template library to deliver visually compelling, bite-sized content that is on-brand and presented in a way that helps people learn and retain information.